Hello to all Habrahabr readers! In this article I want to share my experience of studying neural networks and, as a result, implementing them in Java on the Android platform.

My acquaintance with neural networks began when the Prisma application was released. It processes any photo using neural networks and recreates it from scratch in the selected style. Having become interested, I rushed to look for articles and tutorials, primarily on Habr. To my great surprise, I did not find a single article that described the algorithm behind neural networks clearly and step by step. The information was scattered and missing key points. Also, most authors rush to show code in one programming language or another without bothering with detailed explanations.

Therefore, now that I have a fairly good grasp of neural networks and have found a huge amount of information on various foreign sites, I would like to share it in a series of publications, collecting everything you will need if you are just starting out with neural networks. In this article I will not put a strong emphasis on Java and will explain everything with examples, so that you can transfer it to any programming language you need. In subsequent articles I will talk about my Android application, which predicts the movement of stocks or currencies. In other words, everyone who wants to plunge into the world of neural networks and craves a simple and accessible presentation, or who simply did not understand something and wants to fill in the gap, is welcome to read on.

My first and most important discovery was a playlist by the American programmer Jeff Heaton, in which he explains the principles of operation of neural networks and their classification clearly and in detail. After watching this playlist, I decided to create my own neural network, starting with the simplest example. You probably know that when you start learning a new language, your first program is Hello World. It's a kind of tradition. The world of machine learning has its own Hello World too: a neural network that solves the exclusive or (XOR) problem. The XOR table looks like this:

| a | b | c = a XOR b |
|---|---|-------------|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |
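
The table above can be written down as training data. The following is a minimal sketch; the class name `XorData` and its field names are illustrative assumptions, not something taken from the original application.

```java
// Hypothetical holder for the XOR training data; all names are illustrative.
class XorData {
    // Each row is one training set: the two inputs a and b.
    static final double[][] INPUTS = {
        {0, 0},
        {0, 1},
        {1, 0},
        {1, 1}
    };

    // The ideal answer c for the row with the same index.
    static final double[] IDEAL = {0, 1, 1, 0};

    public static void main(String[] args) {
        for (int i = 0; i < INPUTS.length; i++) {
            int a = (int) INPUTS[i][0];
            int b = (int) INPUTS[i][1];
            // Cross-check the table against Java's built-in XOR operator.
            System.out.printf("%d XOR %d = %d (table says %d)%n",
                    a, b, a ^ b, (int) IDEAL[i]);
        }
    }
}
```

Storing the values as `double` rather than `int` is a design choice made here because the network described below operates on real numbers.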

Accordingly, the neural network takes two numbers as input and must produce another number, the answer, as output. Now about the neural networks themselves.

What is a neural network?

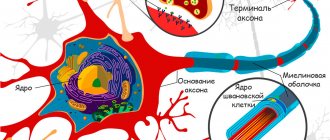

A neural network is a sequence of neurons connected by synapses. This structure came to the world of programming straight from biology. Thanks to it, a machine gains the ability to analyze and even remember various information. Neural networks can not only analyze incoming information but also reproduce it from memory. For those interested, be sure to watch 2 videos from TED Talks: Video 1, Video 2. In other words, a neural network is a machine interpretation of the human brain, which contains billions of neurons transmitting information in the form of electrical impulses.

What are neural networks for?

Neural networks are used to solve complex problems that require analytical calculations similar to what the human brain does.

The most common applications of neural networks are:

Classification: distributing data by parameters. For example, you are given a set of people as input and need to decide which of them to grant a loan and which not. A neural network can do this work by analyzing information such as age, solvency, credit history, and so on.

Prediction: the ability to predict the next step. For example, the rise or fall of shares based on the situation in the stock market.

Recognition: currently the most widespread use of neural networks. It is used by Google when you search by photo, by phone cameras when they detect the position of your face and highlight it, and in much more.

Now, to understand how neural networks work, let's take a look at their components and their parameters.

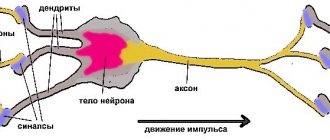

What is a neuron?

A neuron is a computational unit that receives information, performs simple calculations on it, and passes it on. Neurons are divided into three main types: input (blue), hidden (red) and output (green). There are also bias neurons and context neurons, which we will talk about in the next article. When a neural network consists of a large number of neurons, the term "layer" is introduced. Accordingly, there is an input layer that receives information, n hidden layers (usually no more than 3) that process it, and an output layer that outputs the result.

Each neuron has 2 main parameters: input data and output data. For an input neuron, input = output. For the other neurons, the input field holds the summed information from all neurons of the previous layer; it is then normalized with the activation function (for now, let's just think of it as f(x)) and ends up in the output field.
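
The input and output fields described above could be modeled roughly as follows. This is only a sketch under assumptions: the `Neuron` class, its `Type` enum and the `feed` method are hypothetical names, and the sigmoid used in `main` is just a placeholder for f(x).

```java
import java.util.function.DoubleUnaryOperator;

// Hypothetical neuron model; all names are illustrative.
class Neuron {
    enum Type { INPUT, HIDDEN, OUTPUT }

    final Type type;
    double input;   // value received by the neuron
    double output;  // value passed on to the next layer

    Neuron(Type type) {
        this.type = type;
    }

    // Stores the incoming value and computes the output.
    // The parameter f stands in for the activation function f(x).
    void feed(double value, DoubleUnaryOperator f) {
        input = value;
        // An input neuron passes its value through unchanged (input = output).
        output = (type == Type.INPUT) ? value : f.applyAsDouble(value);
    }

    public static void main(String[] args) {
        // Placeholder activation function (sigmoid), chosen only for illustration.
        DoubleUnaryOperator f = x -> 1.0 / (1.0 + Math.exp(-x));

        Neuron in = new Neuron(Type.INPUT);
        in.feed(1.0, f);
        Neuron hidden = new Neuron(Type.HIDDEN);
        hidden.feed(0.4, f);

        System.out.println("input neuron output:  " + in.output);
        System.out.println("hidden neuron output: " + hidden.output);
    }
}
```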

Important to remember: neurons operate on numbers in the range [0,1] or [-1,1]. But how, you may ask, do we then process numbers that fall outside this range? At this point, the simplest answer is to divide 1 by that number, which works as long as the number's magnitude is at least 1. This process is called normalization, and it is used very often in neural networks. More on this a little later.

What is a synapse?

A synapse is a connection between two neurons.

A synapse has one parameter: its weight. The weight changes the input information as it is transmitted from one neuron to the next. Say 3 neurons pass information to the next neuron. Then we have 3 weights, one for each of these neurons. The information from the neuron with the largest weight will dominate in the next neuron (think of mixing colors). In fact, the set of weights of a neural network, or the weight matrix, is a kind of brain of the entire system. It is thanks to these weights that the input information is processed and turned into a result.

Important to remember: when a neural network is initialized, the weights are set to random values.
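
Random initialization of a weight matrix might look like the sketch below. The class name `WeightInit`, the method `randomWeights` and the choice of the interval [-1, 1) are assumptions made for illustration; the article does not specify a range.

```java
import java.util.Arrays;
import java.util.Random;

// Hypothetical helper for random weight initialization; names are illustrative.
class WeightInit {
    // Returns a rows x cols matrix with weights drawn uniformly from [-1, 1).
    // The interval is an assumption, not something prescribed by the article.
    static double[][] randomWeights(int rows, int cols, Random rng) {
        double[][] weights = new double[rows][cols];
        for (int i = 0; i < rows; i++) {
            for (int j = 0; j < cols; j++) {
                // nextDouble() is in [0, 1); scale and shift it to [-1, 1).
                weights[i][j] = rng.nextDouble() * 2.0 - 1.0;
            }
        }
        return weights;
    }

    public static void main(String[] args) {
        // 2 input neurons connected to 3 hidden neurons (sizes chosen arbitrarily).
        double[][] weights = randomWeights(2, 3, new Random());
        System.out.println(Arrays.deepToString(weights));
    }
}
```

Passing the `Random` instance in as a parameter makes it possible to use a fixed seed when a reproducible run is needed.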

How does a neural network work?

This example shows part of a neural network, where the letters I denote the input neurons, the letter H denotes a hidden neuron, and the letters w denote the weights. The input of a neuron is the sum of all incoming values multiplied by their corresponding weights: H1input = (I1 * w1) + (I2 * w2). Let's feed in 1 and 0 as input, with w1 = 0.4 and w2 = 0.7. The input of neuron H1 is then 1*0.4 + 0*0.7 = 0.4. Now that we have the input, we can get the output by plugging the input into the activation function (more on that later). Now that we have the output, we pass it on. We repeat this for all layers until we reach the output neuron. When we run such a network for the first time, the answer will be far from correct, because the network is not trained. To improve the results, we will train it. But before we learn how to do this, let's introduce a few terms and properties of a neural network.
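
The weighted sum for H1 can be reproduced in a few lines. This is a minimal sketch; the class `WeightedSum` and its method name are illustrative, while the inputs 1 and 0 and the weights 0.4 and 0.7 are the values from the example above.

```java
// Hypothetical helper computing a neuron's input; names are illustrative.
class WeightedSum {
    // Sums every input multiplied by its corresponding weight.
    static double weightedSum(double[] inputs, double[] weights) {
        if (inputs.length != weights.length) {
            throw new IllegalArgumentException("inputs and weights must have the same length");
        }
        double sum = 0.0;
        for (int i = 0; i < inputs.length; i++) {
            sum += inputs[i] * weights[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        double[] inputs = {1, 0};       // I1 and I2
        double[] weights = {0.4, 0.7};  // w1 and w2
        // 1*0.4 + 0*0.7
        System.out.println(weightedSum(inputs, weights)); // prints 0.4
    }
}
```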

Activation function

An activation function is a way of normalizing input data (we talked about this earlier). That is, if you have a large number at the input, passing it through the activation function gives you an output in the range you need. There are quite a lot of activation functions, so we will consider only the most basic ones: Linear, Sigmoid (Logistic) and Hyperbolic tangent. They differ mainly in their range of values.

Linear function

$$f(x) = x$$

This function is almost never used, except when you need to test a neural network or pass a value on without conversion.

Sigmoid

$$f(x) = \frac{1}{1 + e^{-x}}$$

This is the most common activation function; its output lies between 0 and 1. Most examples on the web use it, and it is also sometimes called the logistic function. Accordingly, if your data contains negative values (for example, stocks can go not only up but also down), you will need a function that also covers negative values.

Hyperbolic tangent

$$f(x) = \frac{e^{2x} - 1}{e^{2x} + 1}$$

It makes sense to use the hyperbolic tangent only when your values can be both negative and positive, since the output of the function lies between -1 and 1. Using this function with only positive values is not advisable, as this will significantly worsen the results of your neural network.
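
The three activation functions can be sketched in Java as follows. The class name `Activations` is an illustrative assumption; `Math.exp` and `Math.tanh` are standard library methods.

```java
// Sketch of the three activation functions; class and method names are illustrative.
class Activations {
    // Linear: passes the value through unchanged, f(x) = x.
    static double linear(double x) {
        return x;
    }

    // Sigmoid (logistic): f(x) = 1 / (1 + e^(-x)), output between 0 and 1.
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // Hyperbolic tangent: f(x) = (e^(2x) - 1) / (e^(2x) + 1), output between -1 and 1.
    static double tanh(double x) {
        return Math.tanh(x);
    }

    public static void main(String[] args) {
        double x = 0.4; // an arbitrary sample input
        System.out.println("linear:  " + linear(x));
        System.out.println("sigmoid: " + sigmoid(x));
        System.out.println("tanh:    " + tanh(x));
    }
}
```

Using the built-in `Math.tanh` instead of spelling out the exponent formula avoids overflow for large inputs.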

Epoch

When the neural network is initialized, the epoch counter is set to 0, and it has a manually set ceiling. The more epochs have passed, the better trained the network and, accordingly, the better its results. The epoch counter increases each time we go through the entire collection of training sets, in our case 4 sets, or 4 iterations.

It is important not to confuse iteration with epoch and to understand the order in which they are incremented. First the iteration counter increases n times, and only then the epoch counter, not the other way around. In other words, you cannot first train the network on only one set, then on another, and so on. You need to train on each set once per epoch. This way you avoid errors in the calculations.
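
The order in which the two counters grow can be illustrated with a pair of nested loops. This is a sketch only: the class `EpochLoop` and the method `run` are hypothetical, and the actual training step is left as a comment.

```java
// Hypothetical skeleton of the training loop; names are illustrative.
class EpochLoop {
    // Runs maxEpochs epochs over trainingSets sets and
    // returns {epochs completed, total iterations performed}.
    static int[] run(int trainingSets, int maxEpochs) {
        int epoch = 0;            // starts at 0 when the network is initialized
        int totalIterations = 0;
        while (epoch < maxEpochs) {   // maxEpochs is the manually set ceiling
            for (int iteration = 0; iteration < trainingSets; iteration++) {
                // A real network would train on set number 'iteration' here.
                totalIterations++;
            }
            // The epoch grows only after every set has been visited once.
            epoch++;
        }
        return new int[] {epoch, totalIterations};
    }

    public static void main(String[] args) {
        int[] result = run(4, 3); // 4 XOR sets, 3 epochs
        System.out.println("epochs: " + result[0] + ", iterations: " + result[1]);
    }
}
```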

Error

Error is a percentage that reflects the difference between the expected and received responses.

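The error is calculated every epoch and should decline. If it doesn't, you are doing something wrong. The error can be calculated in different ways, but we will consider only three main methods: Mean Squared Error (hereinafter MSE), Root MSE and Arctan. Unlike with activation functions, there is no restriction on which one to use, and you are free to choose whichever method gives you the best results. Just keep in mind that each method counts errors differently, so values obtained with different methods cannot be compared directly. When the differences are smaller than 1 in magnitude, as is usual for outputs in the range [0,1], Root MSE gives the largest value and Arctan the smallest, with MSE in between; this balance is one reason MSE is the most commonly used.

In the formulas below, $i_k$ is the ideal answer for training set $k$, $a_k$ is the answer the network actually gave, and $n$ is the number of sets.

MSE

$$\mathrm{MSE} = \frac{(i_1 - a_1)^2 + (i_2 - a_2)^2 + \dots + (i_n - a_n)^2}{n}$$

Root MSE

$$\mathrm{Root\ MSE} = \sqrt{\frac{(i_1 - a_1)^2 + (i_2 - a_2)^2 + \dots + (i_n - a_n)^2}{n}}$$

Arctan

$$\mathrm{Arctan} = \frac{\arctan^2(i_1 - a_1) + \arctan^2(i_2 - a_2) + \dots + \arctan^2(i_n - a_n)}{n}$$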

The principle of calculating the error is the same in all cases. For each set, we compute the error by subtracting the network's result from the ideal answer. Next, we either square this difference or take the square of its arctangent, sum the values over all sets, and divide the sum by the number of sets. For Root MSE, we additionally take the square root of the result.
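
The three error measures can be sketched as follows. The class `ErrorFunctions` and its method names are illustrative assumptions, and the `actual` values in `main` are made-up numbers standing in for the answers of a partially trained network.

```java
// Sketch of the three error measures; class and method names are illustrative.
class ErrorFunctions {
    // MSE: mean of the squared differences between ideal and actual answers.
    static double mse(double[] ideal, double[] actual) {
        checkLengths(ideal, actual);
        double sum = 0.0;
        for (int i = 0; i < ideal.length; i++) {
            double diff = ideal[i] - actual[i];
            sum += diff * diff;
        }
        return sum / ideal.length;
    }

    // Root MSE: square root of the MSE.
    static double rootMse(double[] ideal, double[] actual) {
        return Math.sqrt(mse(ideal, actual));
    }

    // Arctan: mean of the squared arctangents of the differences.
    static double arctan(double[] ideal, double[] actual) {
        checkLengths(ideal, actual);
        double sum = 0.0;
        for (int i = 0; i < ideal.length; i++) {
            double a = Math.atan(ideal[i] - actual[i]);
            sum += a * a;
        }
        return sum / ideal.length;
    }

    private static void checkLengths(double[] ideal, double[] actual) {
        if (ideal.length != actual.length || ideal.length == 0) {
            throw new IllegalArgumentException("arrays must be non-empty and of equal length");
        }
    }

    public static void main(String[] args) {
        double[] ideal = {0, 1, 1, 0};           // XOR answers
        double[] actual = {0.2, 0.7, 0.6, 0.1};  // made-up network answers
        System.out.println("MSE:      " + mse(ideal, actual));
        System.out.println("Root MSE: " + rootMse(ideal, actual));
        System.out.println("Arctan:   " + arctan(ideal, actual));
    }
}
```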